Executive Summary

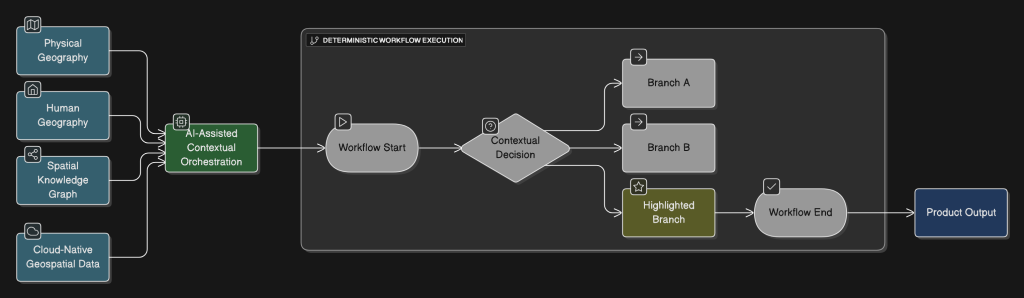

Geospatial operational readiness in 2026 requires more than reliable systems; it demands contextually aware operations that understand where work happens, under what conditions, and how those conditions affect decision quality. Deterministic workflows remain foundational for repeatability and auditability, but they were not designed to interpret the conditional complexity inherent in geospatial data.

As organizations encounter more spatially driven processes, from field operations to inspections, logistics, asset tracking, and risk monitoring, traditional automation approaches begin to strain. AI-enabled orchestration augments deterministic workflows by interpreting spatial nuance at runtime, ensuring that rules and processes execute correctly under real-world conditions.

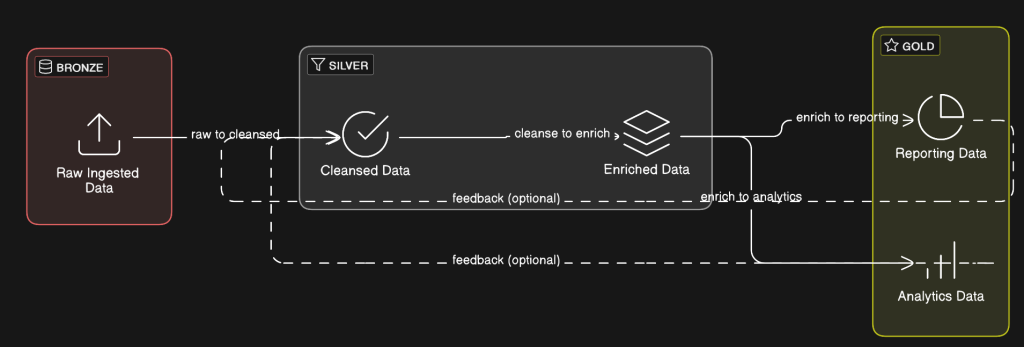

Underlying this capability is a modern data architecture: cloud-native geospatial formats and workflows that make spatial data accessible, scalable, and usable by both analytics and orchestrated operational systems.

This post provides a leadership-focused framework for understanding and maturing geospatial operational readiness in 2026.

Operational Readiness Has Become Spatial

Across industries, operations increasingly hinge on spatial context, whether teams are coordinating operations (Cadell, 2025), managing field activities and distributed assets, responding to incidents, navigating compliance demands, or optimizing logistics. Real-world conditions can change quickly: boundaries move, access varies, environmental factors fluctuate, and situational constraints evolve.

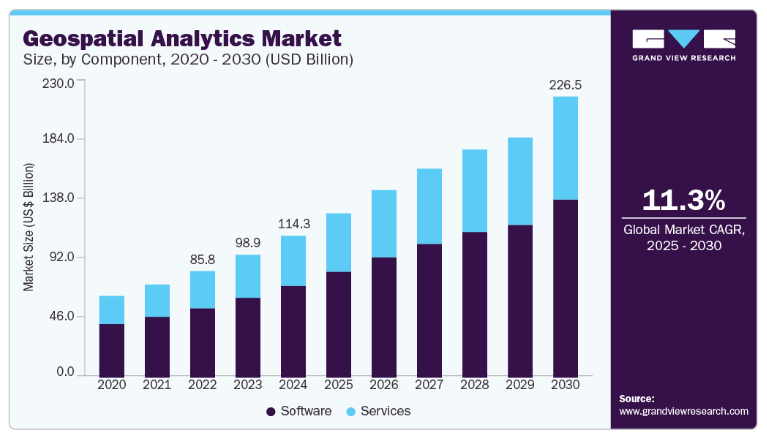

The modern geospatial landscape has advanced beyond desktop-era workflows and static files. Cloud-native architectures and scalable analytics platforms have reshaped how organizations collect, store, and use spatial data. These developments are no longer niche; they form part of the core operational fabric for organizations whose work depends on location, context, and changing conditions.

Operational readiness in 2026 means more than maintaining systems; it means maintaining situational awareness. Together, these shifts signal a need for operational approaches that treat spatial variability as a core design assumption rather than an exceptional case.

Why Deterministic Workflows Need Contextual Orchestration in a Geospatial Environment

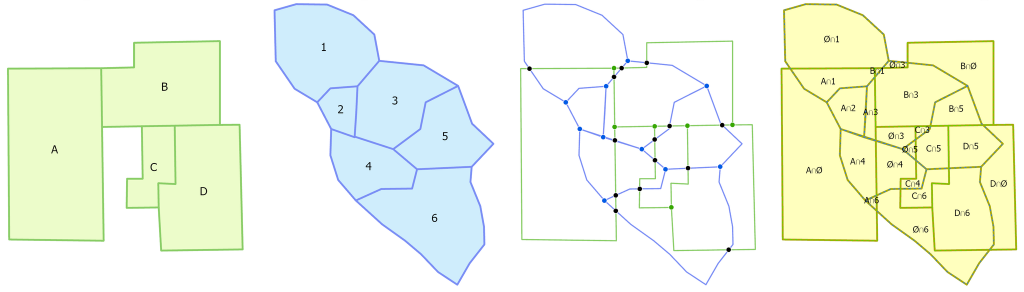

Deterministic workflows, systems that follow defined rules and structured decision paths, remain essential for reliability, predictability, and governance. But spatial data introduces conditional complexity (SafeGraph, 2025; Cloud-Native Geospatial Forum, 2023; Holmes, 2017): geometric variability, inconsistent resolution, temporal dependencies, incomplete or intermittent data, and shifting field conditions.

The rules aren’t the problem (Goodchild, 2007). The challenge is determining which rule or deterministic branch applies under a given set of real-world spatial conditions. Traditional workflow tools assume uniformity in inputs and predictable operating environments (Davenport Group, 2024). Spatial nuance violates those assumptions (Cadell, 2025).

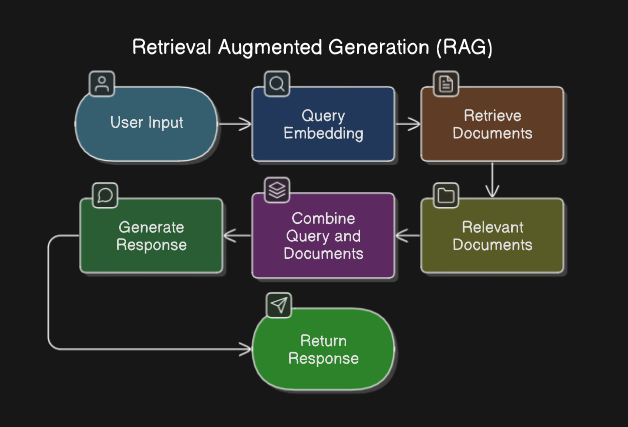

AI-enabled orchestration supplies the missing capability (Smit, 2024; CARTO, 2025). Spatial knowledge graphs strengthen it by structuring spatial relationships, enabling orchestration engines to reason over topology, proximity, and semantic context at runtime (OGC, 2023; Hu et al., 2013). In practice, orchestration contributes by:

- Evaluating spatial and environmental context at runtime.

- Selecting the correct deterministic tools based on real-world conditions.

- Handling exceptions when data is incomplete or ambiguous.

- Scaling to support complex operations without requiring brittle, manually crafted rules for every scenario (Russell & Norvig, 2021; IBM, 2023).
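The division of labor described in this list can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `SpatialContext` fields, the dispatch functions, and the 30-minute freshness limit are all hypothetical, and the context object stands in for whatever model or service interprets spatial conditions in a real deployment. The point is that the branches stay deterministic while the selection among them depends on runtime context.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpatialContext:
    """Hypothetical runtime context for one work order."""
    inside_service_boundary: Optional[bool]  # None means unknown
    road_access_open: Optional[bool]         # None means unknown
    data_age_minutes: float


# Deterministic branches: each one always does the same thing.
def standard_dispatch(order_id: str) -> str:
    return f"{order_id}: dispatch crew on standard route"


def reroute_dispatch(order_id: str) -> str:
    return f"{order_id}: dispatch crew on alternate route"


def escalate(order_id: str, reason: str) -> str:
    return f"{order_id}: escalate to human review ({reason})"


def select_branch(order_id: str, ctx: SpatialContext,
                  max_age_minutes: float = 30.0) -> str:
    """Choose which deterministic branch applies under the given context."""
    # Incomplete or stale context is treated as an exception, not guessed at.
    if ctx.inside_service_boundary is None or ctx.road_access_open is None:
        return escalate(order_id, "incomplete spatial context")
    if ctx.data_age_minutes > max_age_minutes:
        return escalate(order_id, "stale spatial context")
    if not ctx.inside_service_boundary:
        return escalate(order_id, "outside service boundary")
    if ctx.road_access_open:
        return standard_dispatch(order_id)
    return reroute_dispatch(order_id)


print(select_branch("WO-1", SpatialContext(True, False, 5.0)))
```

Keeping the branches as plain functions means each one can still be tested and audited on its own, which is the property the deterministic layer is meant to preserve.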

This approach doesn’t replace determinism; it supports it, ensuring workflows remain accurate and contextually appropriate. These dynamics create a natural bridge to understanding the operational readiness gaps that most small and midsize businesses (SMBs) still face.

Hidden Readiness Gaps

Many of the challenges organizations face stem not from technology failures but from misalignment between deterministic logic and real-world spatial complexity. The most common gaps include:

1. Insufficient Context Delivery

Workflows often execute without the spatial context necessary to determine the right path (SafeGraph, 2025; Esri, 2023). This gap is more than a simple data availability issue. Many organizations rely on static rule sets that assume uniform conditions, but operational environments rarely behave uniformly. Field conditions, environmental constraints, or network coverage can shift within minutes, and without real-time context delivery, deterministic logic defaults to outdated assumptions. As a result, decisions that appear correct in isolation become misaligned with real-world circumstances.

2. Fragmented or Isolated Spatial Data

Spatial information frequently remains siloed within vendor tools, logs, exports, or specialized systems (Estuary, 2025; SafeGraph, 2025). Fragmentation undermines operational readiness because organizations cannot form a coherent view of their spatial landscape. Spatial knowledge graphs help address this by unifying heterogeneous datasets through shared spatial semantics, reducing the friction caused by isolated sources (OGC, 2023; Hu et al., 2013). Even when datasets exist, they are often inconsistently updated, stored in incompatible formats, or accessible only through manual processes. These barriers limit the ability of analytics, workflows, and orchestration engines to use spatial information effectively, resulting in delayed or incomplete situational awareness.

3. Integration Fragility

Integrations built around assumptions of uniform, predictable data fail when exposed to spatial variability (SafeGraph, 2025; Davenport Group, 2024). Traditional integration pipelines expect consistent schemas and steady data quality, but geospatial data often varies in resolution, precision, or completeness. When an integration pipeline encounters deviations, it may break silently or generate outputs that appear correct but are contextually wrong. This fragility erodes confidence in automated systems and forces teams to rely on manual checks or fallbacks that negate the value of automation.
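One way to reduce silent failures is to check each incoming record explicitly before it reaches a workflow. The sketch below assumes GeoJSON-style point features (longitude first, then latitude) and a hypothetical `resolution_m` property; the function name and the 10-meter threshold are illustrative choices, not part of any particular product.

```python
from typing import Any


def validate_feature(feature: dict[str, Any],
                     max_resolution_m: float = 10.0) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    problems: list[str] = []

    geometry = feature.get("geometry")
    if not geometry or geometry.get("type") != "Point":
        problems.append("missing or non-point geometry")
    else:
        coords = geometry.get("coordinates") or []
        if len(coords) < 2:
            problems.append("incomplete coordinates")
        else:
            lon, lat = coords[0], coords[1]
            if not (-180.0 <= lon <= 180.0 and -90.0 <= lat <= 90.0):
                problems.append("coordinates outside longitude/latitude range")

    # "resolution_m" is a hypothetical property used for illustration.
    props = feature.get("properties") or {}
    resolution = props.get("resolution_m")
    if resolution is None:
        problems.append("resolution not reported")
    elif resolution > max_resolution_m:
        problems.append(
            f"resolution {resolution} m coarser than {max_resolution_m} m"
        )
    return problems


record = {
    "type": "Feature",
    "geometry": {"type": "Point", "coordinates": [-77.0, 138.9]},
    "properties": {},
}
print(validate_feature(record))
```

Returning the full list of problems, rather than failing on the first one, gives operators a clearer picture of why a record was rejected and makes contextually wrong inputs visible instead of silent.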

4. Spatial Governance Blind Spots

Location-enabled data raises privacy, continuity, and security concerns that are often not formally addressed (OECD, 2024; OneTrust, 2023). Many organizations treat spatial data as operational rather than sensitive, overlooking its potential to reveal personal movement, facility vulnerabilities, or logistics patterns. Without explicit governance policies, data may be shared too broadly, retained too long, or used in ways that expose the organization to compliance or reputational risks. Effective spatial governance frameworks ensure clear accountability, transparent access controls, and alignment with regulatory expectations.

5. Limited Geospatial and Orchestration Literacy

Teams may understand business rules but lack familiarity with how spatial nuance affects rule application (Korem, 2022; United Nations Committee of Experts on Global Geospatial Information Management, 2020). This literacy gap appears across industries. Organizations may not recognize how physical or human geography modifies operational decisions, or how orchestration tools interpret those conditions. Without a shared understanding, it can be difficult to diagnose workflow failures, design effective automations, or evaluate the implications of new spatial data sources.

6. Legacy vs. Cloud-Native Data Practices

Cloud-native formats (for example, Cloud Optimized GeoTIFF, GeoParquet) unlock scalable analytics, streaming access, and AI integration, but many organizations still rely on older, file-based workflows that limit orchestration potential (Cloud-Native Geospatial Forum, 2023; Holmes, 2017; Smit, 2024).

These gaps prevent deterministic workflows from operating reliably when spatial conditions matter most. Addressing them requires a structured pathway for maturing geospatial and orchestrational capability.

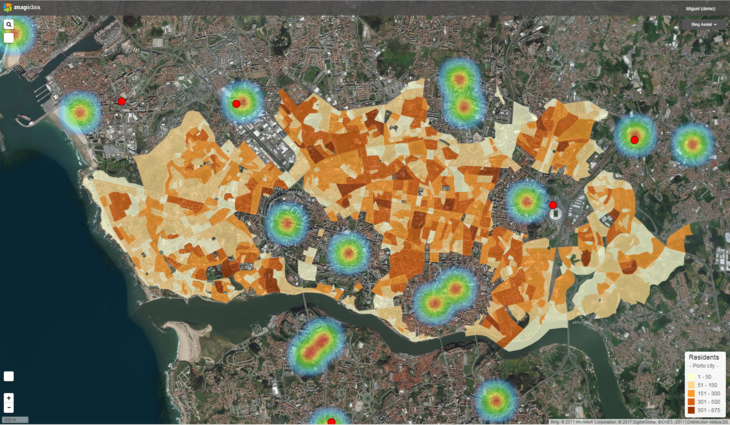

7. Complexity of Physical and Human Geography

Automated workflows often struggle when physical geography, such as terrain, hydrology, vegetation, or built infrastructure, interacts with human geography, including population density, mobility patterns, land use, and socio-economic activity. These layers frequently produce nonlinear or unexpected operational constraints that deterministic logic alone cannot anticipate. For example, human movement patterns may be shaped by physical barriers, and environmental risks are often amplified or mitigated by settlement patterns. Effective orchestration must interpret how these geographic domains interact so that workflows can make contextually accurate decisions in environments where spatial relationships are complex and dynamically interdependent (Goodchild, 2007; National Academies of Sciences, Engineering, and Medicine, 2021).

A Geospatial Operational Readiness Model

A credible maturity model helps organizations understand how to align geospatial data, deterministic workflows, and contextual orchestration over time.

Stage 1: Spatial Data Foundation

A strong spatial data foundation ensures that all subsequent analytical and operational capabilities rest on accurate, accessible, and trustworthy geospatial information.

- Clean, current, governed spatial datasets provide reliable inputs for analytics and workflows, reducing errors that stem from outdated or inconsistent data (Cloud-Native Geospatial Forum, 2023).

- The use of cloud-native geospatial formats enables scalable access and processing, which supports real-time orchestration and modern integration patterns.

- Defined ownership and access controls ensure accountability and reduce risk, allowing teams to trust and effectively leverage spatial data.

- Robust metadata management, including lineage, temporal currency, coordinate systems, and quality indicators, ensures that both analytics and AI-assisted orchestration can correctly interpret spatial data and make reliable context-driven decisions.
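To make the metadata point concrete, the sketch below shows one way a workflow might check lineage, currency, coordinate system, and quality before trusting a dataset. The `DatasetMetadata` fields and the `fitness_issues` function are hypothetical names chosen for illustration, and the thresholds would depend on the operation in question.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class DatasetMetadata:
    """Hypothetical metadata record for one spatial dataset."""
    name: str
    source: str                # lineage: where the data came from
    crs: str                   # coordinate reference system, e.g. "EPSG:4326"
    last_updated: datetime     # temporal currency
    positional_error_m: float  # quality indicator


def fitness_issues(
    meta: DatasetMetadata,
    required_crs: str,
    max_age: timedelta,
    max_error_m: float,
    now: Optional[datetime] = None,
) -> list[str]:
    """Return reasons the dataset should not drive a decision (empty if none)."""
    now = now or datetime.now(timezone.utc)
    issues: list[str] = []
    if meta.crs != required_crs:
        issues.append(f"CRS is {meta.crs}, expected {required_crs}")
    if now - meta.last_updated > max_age:
        issues.append("data is older than the allowed age")
    if meta.positional_error_m > max_error_m:
        issues.append("positional error exceeds tolerance")
    return issues


roads = DatasetMetadata(
    name="roads",
    source="vendor feed",
    crs="EPSG:3857",
    last_updated=datetime(2025, 11, 1, tzinfo=timezone.utc),
    positional_error_m=12.0,
)
print(fitness_issues(roads, "EPSG:4326", timedelta(days=7), 5.0))
```

A check like this only works if the metadata is captured and maintained in the first place, which is why it belongs in the foundation stage rather than being bolted on later.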

Stage 2: Contextual Spatial Analytics

Contextual spatial analytics transforms raw location data into actionable insights that inform operational decisions and prepare an organization for orchestrated workflows.

- Analytics platforms reveal patterns, risks, and operational hotspots, helping leaders understand where geographic conditions influence performance (CARTO, 2025).

- Insights expressed through APIs or events provide machine-readable context that orchestration systems can incorporate into decision pathways.
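As a rough illustration of machine-readable context, an analytics platform might publish a small JSON event that an orchestration system validates before use. The event type, field names, and risk levels below are assumptions made for this sketch rather than an established schema; only the GeoJSON-style point (longitude, then latitude) follows a published convention.

```python
import json
from datetime import datetime, timezone

# Hypothetical set of fields an orchestrator would require.
REQUIRED_FIELDS = {"type", "asset_id", "location", "risk_level", "observed_at"}


def build_context_event(asset_id: str, lon: float, lat: float,
                        risk_level: str, observed_at: datetime) -> str:
    """Serialize one spatial insight as a JSON event."""
    event = {
        "type": "spatial.context.updated",  # hypothetical event name
        "asset_id": asset_id,
        "location": {"type": "Point", "coordinates": [lon, lat]},
        "risk_level": risk_level,
        "observed_at": observed_at.isoformat(),
    }
    return json.dumps(event, sort_keys=True)


def parse_context_event(payload: str) -> dict:
    """Parse an event and reject it if required context is missing."""
    event = json.loads(payload)
    missing = REQUIRED_FIELDS - set(event)
    if missing:
        raise ValueError(f"context event missing fields: {sorted(missing)}")
    return event


payload = build_context_event(
    "pump-12", -77.03, 38.9, "elevated",
    datetime(2026, 1, 10, 14, 30, tzinfo=timezone.utc),
)
print(parse_context_event(payload)["risk_level"])
```

Rejecting incomplete events at the boundary keeps downstream deterministic logic from running on partial context.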

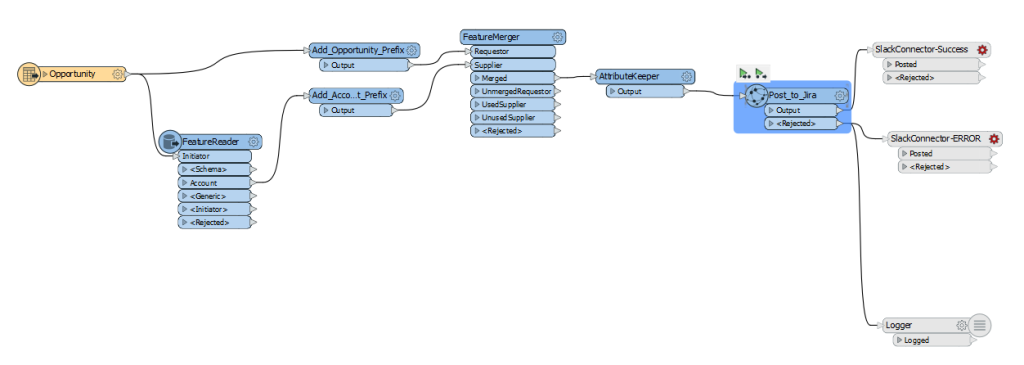

Stage 3: Spatial Integration with Deterministic Workflow Support

Spatial integration ensures that deterministic workflows can operate correctly by incorporating real-world geographic complexity into execution logic.

- Systems that ingest spatial context and operate despite variability help maintain reliability when environmental conditions change unexpectedly (SafeGraph, 2025).

- Deterministic workflows instrumented to accept context inputs execute more accurately because they adapt rule selection to real-time conditions.

- Integrations designed for spatial complexity improve system resilience, reducing workflow failures caused by assumptions of uniformity.

Stage 4: AI-Assisted Contextual Orchestration

AI-assisted orchestration enables workflows to interpret spatial nuance dynamically, improving decision accuracy under varying conditions. Support for orchestration patterns aligned with the Model Context Protocol (MCP) enhances interoperability between systems and helps contextual signals to be exchanged consistently across operational environments.

- AI that interprets spatial nuance to guide deterministic rule selection improves workflow correctness and reduces misaligned decisions (Smit, 2024).

- Adaptive exception handling ensures that workflows continue operating even when data is missing, ambiguous, or contradictory.

- Governance that ensures transparency, auditability, and escalation pathways builds trust and compliance into the orchestration process.
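The combination of adaptive exception handling and auditability can be sketched as a decision function that records its reasoning every time it runs. The scenario (water-level readings deciding whether a route is usable), the `AuditLog` class, and the `decide_route` function are hypothetical; a production system would write to a durable audit store rather than an in-memory list.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AuditLog:
    """In-memory stand-in for a durable audit store."""
    entries: list[dict] = field(default_factory=list)

    def record(self, workflow: str, decision: str, reason: str) -> None:
        self.entries.append(
            {"workflow": workflow, "decision": decision, "reason": reason}
        )


def decide_route(
    workflow: str,
    water_levels_m: list[Optional[float]],  # None marks a missing reading
    closure_level_m: float,
    log: AuditLog,
) -> str:
    """Pick 'proceed', 'hold', or 'escalate' and record why."""
    usable = [level for level in water_levels_m if level is not None]
    if not usable:
        decision, reason = "escalate", "no usable readings"
    elif all(level < closure_level_m for level in usable):
        decision, reason = "proceed", "all readings below closure level"
    elif all(level >= closure_level_m for level in usable):
        decision, reason = "hold", "all readings at or above closure level"
    else:
        # Contradictory sources go to a person instead of being averaged away.
        decision, reason = "escalate", "sources disagree"
    log.record(workflow, decision, reason)
    return decision


log = AuditLog()
decide_route("route-7", [0.2, None, 0.3], 0.5, log)
decide_route("route-7", [0.2, 0.9], 0.5, log)
for entry in log.entries:
    print(entry)
```

Because every outcome, including escalations, leaves a record, reviewers can reconstruct why a workflow took a given path.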

Stage 5: Operational Intelligence

Operational intelligence represents the culmination of geospatial maturity, where organizations use context-aware, orchestrated workflows to drive predictive and adaptive operations.

- Real-time spatial context that continuously informs decisions enables proactive rather than reactive operational management (United Nations Committee of Experts on Global Geospatial Information Management, 2020).

- Workflows that execute with full contextual fidelity operate consistently and accurately, even when conditions are complex or rapidly evolving.

- Predictive and scenario-driven capabilities strengthen strategic planning, allowing leaders to anticipate risk and optimize resource allocation.

This model emphasizes capability-building, not tool adoption, as the central theme of operational readiness. It also provides a logical foundation for the leadership considerations that follow.

Leadership Implications – Modern Technology Direction Requires Geospatial and Orchestrational Awareness

Technology leadership must evolve from managing systems to managing context. In practice, this means:

- Treating spatial context as a first-class input to operational decisions.

- Ensuring deterministic workflows have structured ways to receive and interpret contextual data.

- Championing cloud-native geospatial architectures.

- Establishing governance around both deterministic logic and AI-assisted orchestration.

- Developing geospatial literacy at the leadership level, not just within technical teams.

Technology leaders must guide the evolution from static, assumption-driven workflows to contextually adaptive operations (Cadell, 2025).

A Strategic Pathway Toward Geospatial Operational Readiness

Geospatial operational readiness isn’t achieved through a short-term checklist; it is a strategic capability that develops over time. A sustainable approach includes:

- Establish Leadership Alignment Around Context-Aware Operations

Clarify how spatial context influences operational outcomes and where deterministic logic needs contextual support (UN-GGIM, 2020; OECD, 2024).

- Conduct a Context-Centric Operational Assessment

Identify workflows where spatial nuance materially affects execution accuracy or efficiency (CARTO, 2025; Esri, 2023).

- Develop an Architecture That Supports Contextual Orchestration

Modernize data and integration patterns to ensure workflows can access and act upon spatial context (Cloud-Native Geospatial Forum, 2023; Smit, 2024).

- Introduce Contextual Orchestration Through Iterative Pilots

Start with workflows where errors or inefficiencies are most clearly tied to spatial complexity (Davenport Group, 2024; SafeGraph, 2025).

- Build a Long-Term Roadmap for Spatially Informed Operations

Plan for phased capability expansion aligned with operational, compliance, and partner expectations (UN-GGIM, 2020; OECD, 2024).

This pathway reinforces that readiness is ongoing and multi-dimensional. It also sets the stage for translating strategy into daily operational practice.

Conclusion: Readiness Requires Spatially Informed, Contextually Aware Operations

Operational readiness in 2026 rests on the ability to interpret spatial context and execute deterministic workflows with precision. Organizations that combine modern geospatial architectures with AI-assisted contextual orchestration will be equipped to make accurate, timely, and resilient decisions.

Those that continue relying on static assumptions and isolated spatial tools may find their operations increasingly misaligned with real-world conditions, and with the expectations of customers, partners, and regulators.

A disciplined, strategic approach to geospatial operational readiness provides both resilience today and a foundation for innovation tomorrow.

If your organization is exploring how to strengthen geospatial operational readiness, modernize workflows, or introduce AI orchestration, Cercana can help. We partner with SMB technology leaders and prime contractors to evaluate current capabilities, identify maturity gaps, and design architectures that support scalable, context-aware operations. Contact us to discuss your goals and learn how we can support your next stage of geospatial transformation.

References

Cadell, W. (2025, December 29). Custom Inc. Strategicgeospatial.com; Strategic Geospatial. https://www.strategicgeospatial.com/p/custom-inc

CARTO. (2025, August 28). Branch out: New control components for more resilient workflows. CARTO. https://carto.com/blog/new-control-components-for-more-resilient-workflows

Cloud-Native Geospatial Forum. (2023). Cloud-optimized GeoTIFFs. In Cloud-native geospatial data formats guide. Cloud-Native Geospatial Forum. https://guide.cloudnativegeo.org/cloud-optimized-geotiffs/intro.html

Davenport Group. (2024, November 13). Key challenges of data integration and solutions. Davenport Group. https://davenportgroup.com/insights/key-challenges-of-data-integration-and-solutions/

Estuary. (2025, March 20). 8 reasons why data silos are problematic and how to fix them. Estuary. https://estuary.dev/blog/why-data-silos-problematic/

Esri. (2023). ArcGIS Field Maps: Real-time data capture and situational awareness. Esri. https://www.esri.com/en-us/arcgis/products/arcgis-field-maps/overview

Holmes, C. (2017, October 10). Cloud native geospatial part 2: The Cloud optimized GeoTIFF. Planet Stories. https://medium.com/planet-stories/cloud-native-geospatial-part-2-the-cloud-optimized-geotiff-6b3f15c696ed

Hu, Y., et al. (2013). A geo-ontology design pattern for semantic trajectories. In T. Tenbrink, J. Stell, A. Galton, & Z. Wood (Eds.), Spatial information theory: COSIT 2013 (Lecture Notes in Computer Science, Vol. 8116). Springer. https://doi.org/10.1007/978-3-319-01790-7_24

Korem. (2022, March 28). Lack of resources and expertise within companies to exploit geospatial technology. Korem. https://www.korem.com/lack-of-resources-and-expertise-within-companies-to-exploit-geospatial-technology/

OECD. (2024). AI, data governance and privacy: Synergies and areas of international co-operation. OECD Publishing. https://www.oecd.org/en/publications/ai-data-governance-and-privacy_2476b1a4-en.html

OneTrust. (2023). Privacy on the horizon: What organizations need to watch in 2023. OneTrust. https://www.onetrust.com/resources/privacy-on-the-horizon-what-organizations-need-to-watch-in-2023-report/

OGC. (2023). OGC GeoSPARQL: A geographic query language for RDF data. Open Geospatial Consortium. https://docs.ogc.org/is/22-047r1/22-047r1.html

SafeGraph. (2025). Challenges of geospatial data integrations. SafeGraph. https://www.safegraph.com/guides/geospatial-data-integration-challenges

Smit, C. (2024, October 16). Cloud-native geospatial data formats. NASA Goddard Earth Sciences Data and Information Services Center. https://ntrs.nasa.gov/api/citations/20240012643/downloads/Cloud-Native%20Geospatial%20Data%20Formats.pdf

United Nations Committee of Experts on Global Geospatial Information Management. (2020). Integrated geospatial information framework: Strategic pathway 8 – Capacity and education. UN-GGIM. https://ggim.un.org/UN-IGIF/documents/SP8-Capacity_and_Education_19May2020_GLOBAL_CONSULTATION.pdf